Morphological intelligence relates to physical characteristics: an autonomous vehicle such as the Lunar Rover can negotiate rough terrain by virtue of its suspension and drive systems; it doesn't need to learn how. Swarm intelligence is found in insects and birds and is non-hierarchical, with each individual acting to support the swarm as needed. Individual intelligence covers what an individual learns by itself, and social intelligence what it learns from others. On this four-dimensional model, most plants, simple organisms, robots and animals show intelligence in at most two dimensions, whereas humans occupy the whole four-dimensional space.

When the possibility of Artificial Intelligence (AI) was first considered, it was believed that tasks such as game playing would be difficult to master. However, these activities have a well-defined environment (the rules) and, given sufficient computing power, can if nothing else be solved by evaluating all possible moves. Tasks that are relatively simple for a human, like making a pot of tea, prove harder to solve (particularly in someone else's kitchen!), because the environment is poorly defined and subject to variation. What is needed here is Artificial General Intelligence (AGI).
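The point about well-defined games can be illustrated with a toy example. The sketch below is not from the talk: it assumes a simple take-away game (players alternately remove 1, 2 or 3 stones, and whoever takes the last stone wins) and decides whether the player to move can force a win by evaluating every possible line of play. The names `can_win` and `best_move` are illustrative; `lru_cache` only avoids re-evaluating positions already seen and doesn't change the result of the exhaustive search.

```python
from functools import lru_cache
from typing import Optional

# Assumed toy game with fully defined rules (not from the talk):
# players alternately remove 1, 2 or 3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """True if the player to move can force a win, found by searching every line of play."""
    # No stones left means the previous player took the last one, so the mover has lost.
    if stones == 0:
        return False
    # The mover wins if any legal move leaves the opponent in a losing position.
    return any(not can_win(stones - m) for m in MOVES if m <= stones)


def best_move(stones: int) -> Optional[int]:
    """Return a move that leaves the opponent in a losing position, or None if there is none."""
    for m in MOVES:
        if m <= stones and not can_win(stones - m):
            return m
    return None


if __name__ == "__main__":
    for n in range(1, 9):
        print(n, can_win(n), best_move(n))
```

The search discovers for itself that piles which are a multiple of 4 are lost for the player to move; no such complete list of possible moves exists for making tea in an unfamiliar kitchen.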

If an AI system can learn from solving one problem and use that knowledge to solve a different type of problem, then it can be said to demonstrate AGI. Three methods have been proposed to achieve AGI: design, evolution and reverse engineering from animals. The last of these was considered too much in the realm of science fiction to be taken seriously.

Some success had been achieved in demonstrating robotic evolution. A computer model of an arrangement of motorised limbs was allowed to mutate into new designs, which were judged by their ability to move. Good designs were allowed to 'breed' and mutate in turn, and the best designs were built as working mechanisms. An interesting observation was that all these designs exhibited bilateral symmetry, even though that had not been one of the criteria used to select designs for breeding.
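The mutate, judge and breed loop described above can be sketched as a basic genetic algorithm. This is a minimal illustration under stated assumptions, not the method used in the work shown: a 'design' is just a list of six numbers standing in for limb parameters, and the hypothetical `fitness` function rewards closeness to an arbitrary `TARGET` instead of running a physics simulation of how far the mechanism moves.

```python
import random

# Hypothetical stand-in for "ability to move": a real system would simulate the
# mechanism; here fitness just rewards parameters close to an arbitrary target.
TARGET = [0.8, -0.3, 0.5, 0.1, -0.7, 0.9]


def fitness(design):
    return -sum((d - t) ** 2 for d, t in zip(design, TARGET))


def mutate(design, rng, rate=0.1):
    # Perturb every parameter with small Gaussian noise.
    return [d + rng.gauss(0, rate) for d in design]


def breed(a, b, rng):
    # Uniform crossover: each parameter is taken from one parent at random.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def evolve(pop_size=30, generations=100, seed=1):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in TARGET] for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: judge every design and keep the better half unchanged.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        # Breed and mutate the survivors to refill the population.
        children = [
            mutate(breed(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return max(population, key=fitness)


if __name__ == "__main__":
    best = evolve()
    print([round(x, 2) for x in best], round(fitness(best), 4))
```

Because the better half of each generation survives unchanged, the best design never gets worse from one generation to the next; nothing in the loop asks for any particular shape or symmetry.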

Is evolution a viable method of achieving AGI? Does the process require too much energy? The evolution of the human animal has needed somewhere between $8 \times 10^{21}$ J and $6 \times 10^{30}$ J (the upper limit being set by the energy received from the Sun) and has produced a brain with around $10^{14}$ synapses. At the other end of the scale, the nematode *C. elegans* has had its whole genome sequenced and its neuronal network mapped (302 neurons and about 5,000 synapses). Kleiber's Law, an empirical finding, states that for many animals the metabolic rate is proportional to the ¾ power of the animal's mass, which gives some indication of how energy consumption and intelligence might relate.
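Kleiber's Law can be made concrete with a little arithmetic. If metabolic rate scales as M^¾, an animal 10,000 times heavier uses 10,000^¾ = 1,000 times as much energy, but only one tenth as much per kilogram, since the rate per unit mass scales as M^(-1/4). The helper functions below are illustrative only; they work with mass ratios, so no constant of proportionality has to be assumed.

```python
import math


def relative_metabolic_rate(mass_ratio: float, exponent: float = 0.75) -> float:
    """Factor by which metabolic rate grows when mass grows by `mass_ratio`,
    assuming Kleiber's Law: rate proportional to mass ** exponent."""
    return mass_ratio ** exponent


def relative_rate_per_kg(mass_ratio: float, exponent: float = 0.75) -> float:
    """Rate per unit mass scales as mass ** (exponent - 1)."""
    return mass_ratio ** (exponent - 1)


if __name__ == "__main__":
    # An animal 10,000 times heavier: about 1,000x the energy, about 0.1x per kilogram.
    ratio = 10_000
    print(round(relative_metabolic_rate(ratio)), round(relative_rate_per_kg(ratio), 3))
    assert math.isclose(relative_metabolic_rate(ratio) / ratio, relative_rate_per_kg(ratio))
```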

During the talk several short video clips were shown, ranging from the human-like 'Actroid' to small 'swarming' robots and the anthropomimetic robot that Prof. Owen Holland and his team had developed. The latter was unusual in that it replicated the structure of the human body, not just its external appearance.

Prof. Winfield spoke about the work of his own team in developing what they called a Consequence Engine. Their robots updated an internal model of their environment every half-second and attempted to work out the consequences of any proposed action, changing that action if the consequences weren't 'good'. Video clips showed the model robot avoiding other model robots as it negotiated a corridor. Such robots can attempt to implement Isaac Asimov's First Law of Robotics: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.” The model robot using the consequence engine was shown to divert from its task to deflect other model robots, acting as 'humans', from reaching forbidden 'holes' in the test corridor. Adding a second 'human' created a dilemma: which 'human' to save? Unfortunately this meant that half the time no-one was saved!
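The Consequence Engine idea (simulate each candidate action in an internal model, then pick the one whose predicted outcome is best, with harm to a 'human' outranking the robot's own task) can be sketched in a toy one-dimensional corridor. Everything here is an assumption for illustration, not the team's implementation: `World`, `step`, `consequences` and `choose_action` are hypothetical names, each candidate action is simply held for a fixed horizon of ticks, a 'human' is deflected only when the robot stands in the cell it is about to enter, and the two robots may pass through one another.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class World:
    robot: int  # robot's cell in a 1-D corridor
    human: int  # cell of the robot acting as a 'human'
    hole: int   # cell the 'human' must not reach
    goal: int   # cell the robot is trying to reach (its own task)


ACTIONS = (1, 0, -1)  # move right, wait, move left (one cell per tick)


def step(w: World, action: int) -> World:
    """Hypothetical internal model: advance the simulated world by one tick."""
    robot = w.robot + action
    # The 'human' walks one cell towards the hole...
    direction = (w.hole > w.human) - (w.hole < w.human)
    nxt = w.human + direction
    # ...unless the robot occupies that cell, which deflects (stops) it.
    human = w.human if nxt == robot else nxt
    return replace(w, robot=robot, human=human)


def consequences(w: World, action: int, horizon: int = 8):
    """Simulate holding `action` for `horizon` ticks.
    Returns (human_harmed, robot_distance_to_goal)."""
    harmed = False
    for _ in range(horizon):
        w = step(w, action)
        harmed = harmed or w.human == w.hole
    return harmed, abs(w.robot - w.goal)


def choose_action(w: World, horizon: int = 8) -> int:
    # Tuples compare element by element, so avoiding harm (False < True)
    # outranks progress towards the robot's own goal.
    return min(ACTIONS, key=lambda a: consequences(w, a, horizon))


if __name__ == "__main__":
    print(choose_action(World(robot=2, human=6, hole=0, goal=10)))
    print(choose_action(World(robot=2, human=6, hole=20, goal=10)))
```

With a 'human' walking towards the hole at cell 0, the engine chooses to wait in its path rather than head for its goal; with no hazard in play it simply gets on with its task. Calling `choose_action` afresh on every tick would mirror the half-second update cycle described in the talk.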

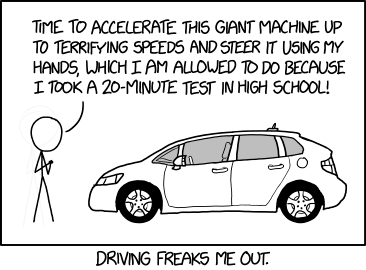

As was to be expected from such a thought-provoking talk, quite a few interesting questions were raised, several relating to 'driverless cars' and to whether or not there is a theory of intelligence.

The talk certainly gave one a lot to think about. It is many years since I studied robotics, and one thing that hasn't changed is the difference between the academic and the commercial approach: academia wants to develop a theory first, while the commercial world moves directly to a solution to a specific problem. (I recall a 'snake' robot with multiple vertical-axis joints, designed to weld the inside of pipes, that didn't fit at all well within the academics' classification scheme.)

Will it ever be possible to produce a robot with AGI? I got the impression that Prof. Winfield thought it was too difficult. Part of that difficulty is the academic desire (no bad thing) to define what is meant by AGI. Perhaps to have AGI a robot must be able 'to set the question', not just 'answer the question'. Robots can solve Rubik's Cube; a child, not knowing what 'the problem' is, might set themselves the task of forming a cross-shaped pattern on each face. A computer can solve a permutation problem as given, whereas a human realises that the inverse problem (1-c) might well be easier to solve. Both of these require a novel approach, independent of any foreknowledge.

Links.

Actroid – Humanoid Robot

Golem Evolutionary Robots

The Anthropomimetic Principle – Paper by Owen Holland & Rob Knight

ECCEROBOT